A version of this article appears in the May 19 edition of Aviation Week & Space Technology, p. 59, Frank Morring, Jr.

A hyperspectral imager on the International Space Station (ISS) that was developed by the U.S. Navy as an experiment in littoral-warfare support is finding new life as an academic tool under NASA management, and already has drawn some seed money as a pathfinder for commercial Earth observation.

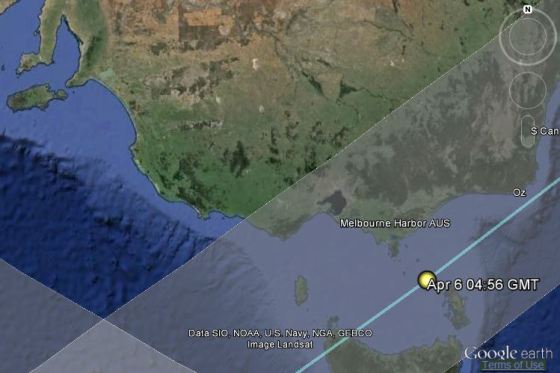

Facing Earth in open space on the Japanese Experiment Module’s porchlike Exposed Facility, the Hyperspectral Imager for Coastal Oceans (HICO) continues to return at least one image a day of near-shore waters with unprecedented spectral and spatial resolution.

HICO was built as a low-cost way to test whether hyperspectral imaging from orbit could meet the Navy's operational needs close to shore. Drawing on its experience in the Persian Gulf and other shallow-water operations, the Office of Naval Research wanted to evaluate whether space-based hyperspectral imagery could characterize littoral waters and support bathymetry, tracking changes over time that could affect operations.

The Naval Research Laboratory (NRL) developed HICO, basing it on airborne hyperspectral imaging technology and off-the-shelf hardware to hold down costs. HICO was launched Sept. 10, 2009, on a Japanese H-2 Transfer Vehicle as part of the HICO and RAIDS (Remote Atmospheric and Ionospheric Detection System) Experimental Payload; it returned its first image two weeks later.

In three years of Navy-funded operations, HICO “exceeded all its goals,” says Mary Kappus, coastal and ocean remote sensing branch head at NRL.

“In the past it was blue ocean stuff, and things have moved more toward interest in the coastal ocean,” she says. “It is a much more difficult environment. In the open ocean, multi-spectral was at least adequate.”

NASA, the U.S. partner on the ISS, took over HICO in January 2013 after Navy funding expired. The Navy also released almost all of the HICO data collected during its three years running the instrument, and those data have been posted for open access on the HICO website managed by Oregon State University.

While the Navy program was open to most researchers, its principal-investigator approach and the service's multistep approval process made gaining access to the HICO instrument laborious.

“[NASA] wanted it opened up, and we had to get permission from the Navy to put the historical data on there,” says Kappus. “So anything we collect now goes on there, and then we ask the Navy for permission to put old data on there. They reviewed [this] and approved releasing most of it.”

Under the new regime NRL still operates the HICO sensor, but through the NASA ISS payload office at Marshall Space Flight Center. This more-direct approach has given users access to more data and, depending on the target’s position relative to the station orbit, a chance to collect two images per day instead of one. Kappus explains that the data buffer on HICO is relatively small, so coordination with the downlink via the Payload Operations Center at Marshall is essential to collecting data before the buffer fills up.

Task orders are worked through the same channels. Presenting an update to HICO users in Silver Spring, Md., on May 7, Kappus said 171 of the 332 total "scenes" targeted between Nov. 11, 2013, and March 12 were requested by researchers backed by NRL and NASA; international researchers accounted for the balance.

Data from HICO are posted on NASA's Ocean Color website, where usage also is tracked. After the U.S., "China is the biggest user" of the website data, Kappus says, followed by Germany, Japan and Russia. The types of data sought, such as seasonal bathymetry that shows changes in the bottom of shallow waters, have remained the same through the transition from Navy to NASA.

“The same kinds of things are relevant for everybody; what is changing in the water,” she says.

HICO offers unprecedented detail from its perch on the ISS, providing 90-meter (295-ft.) resolution across wavelengths of 380-960 nanometers sampled at 5.7-nanometer intervals. Sorting through that rich dataset requires sophisticated software, typically custom-made and out of reach for many users.
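To give a rough sense of how many spectral samples those figures imply, the sketch below simply divides the quoted 380-960-nm range by the 5.7-nm sampling interval. It assumes evenly spaced samples starting at the lower edge of the range; the constant and function names are illustrative only, and the instrument's actual band placement may differ.

```python
# Rough count of spectral samples implied by the figures quoted above:
# a 380-960 nm range sampled every 5.7 nm.
# Assumption: samples are evenly spaced, starting at the lower edge.

RANGE_START_NM = 380.0
RANGE_END_NM = 960.0
SAMPLING_NM = 5.7


def band_centers(start_nm, end_nm, step_nm):
    """Return evenly spaced wavelengths from start_nm up to, at most, end_nm."""
    count = int((end_nm - start_nm) // step_nm) + 1
    return [round(start_nm + i * step_nm, 1) for i in range(count)]


bands = band_centers(RANGE_START_NM, RANGE_END_NM, SAMPLING_NM)
print(len(bands), bands[0], bands[-1])  # 102 samples, from 380.0 to 955.7 nm
```

Even under this simplified assumption, each 90-meter pixel carries on the order of 100 spectral values, which is why processing HICO scenes calls for specialized software.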

To expand the user base for HICO and future Earth-observing sensors on the space station, the Center for the Advancement of Science in Space, the nonprofit set up by NASA to promote commercial use of U.S. National Laboratory facilities on the ISS, awarded a $150,000 grant to HySpeed Computing, a Miami-based startup, and Exelis to demonstrate an online image-processing system that can rapidly integrate new algorithms.

James Goodman, president/CEO of HySpeed, says the idea is to build a commercial way for users to process HICO data for their own needs at the same place online that they get it.

“Ideally a copy of this will [be] on the Oregon State server where the data resides,” Goodman says. “As a HICO user you would come in and say ‘I want to use this data, and I want to run this process.’ So you don’t need your own customized remote-sensing software. It expands it well beyond the research crowd that has invested in high-end remote-sensing software. It can be any-level user who has a web browser.”